Authors:

(1) Busra Tabak [0000 −0001 −7460 −3689], Bogazici University, Turkey {[email protected]};

(2) Fatma Basak Aydemir [0000 −0003 −3833 −3997], Bogazici University, Turkey {[email protected]}.

Table of Links

- Abstract and Introduction

- Background

- Approach

- Experiments and Results

- Discussion and Qualitative Analysis

- Related Work

- Conclusions and Future Work, and References

5 Discussion and Qualitative Analysis

In this section, we focus on the validity of threats that could impact the reliability and generalizability of our study results. We discuss potential sources of bias, confounding variables, and other factors that may affect the validity of our study design, data collection, and analysis. We also describe our experiment for user evaluation in the company, which is aimed at investigating the effectiveness of our approach for issue assignment. We explain the methodology we use to gather feedback from users, such as surveys or interviews, and how we plan to analyze the results.

5.1 Threats to Validity

In this section, we discuss the validity threats to our study concerning internal validity, external validity, construct validity, and conclusion validity. (Wohlin et al. [54])

Internal validity pertains to the validity of results internal to a study. It focuses on the structure of a study and the accuracy of the conclusions drawn. To avoid creating a data set with inaccurate or misleading information for the classification, the corporate employees labeled the employees by fields in the data set. We attempt to use well-known machine learning libraries during the implementation phase to prevent introducing an internal threat that can be brought on by implementation mistakes. All of our classification techniques specifically make use of the Python Sklearn [47] package. Sklearn and NLTK are used to preprocess the text of the issues and Sklearn metrics are used to determine accuracy, precision, and recall. We think that the application that can cause the most internal threat is when allocating Turkish issues to part of speech tags. Since Turkish is not as common as English and is an agglutinative language, it is more difficult to find a highly trained POS tagger library that provides high precision. We decided to use the Turkish pos tagger [59] library by comparing many parameters such as data numbers, accuracy percentages, and usage popularity among many Turkish POS tagger libraries. Turkish pos tagger library includes 5110 sentences and the data set originally belongs to Turkish UD treebank. For 10-fold validation, the accuracy of the model is 95%.

External validity involves the extent to which the results of a study can be applied beyond the sample. We use the data set of five different applications with thousands of issues. These projects use different software languages and various technologies at the front-end and back-end layers, including restful services, communication protocols such as gRPC and WebSocket, database systems, and hosting servers. The issue reports cover a long period of time from 2011 to 2021. However, all the projects we get from the issue reports are mainly concerned with the development of web projects made to run in the browser on the TV. Issues contain many TV-specific expressions, such as application behaviors that occur as a result of pressing a button on the remote or resetting the TV. We make great efforts to ensure that the features we design to prevent external validity concerns are not particular to the data we utilize. For our classification analysis to be applicable in other fields, we believe that it will be sufficient to replicate it using other data sets from various fields.

Construct validity refers to the degree to which a test or experiment effectively supports its claims. The performance of our automated issue assignment system is evaluated using the well-known accuracy metric. We additionally back it up with two other well-known metrics, namely recall, and precision. Tasks in the organization where we use the data set can only be given to one employee, and that employee is also in charge of the sub-tasks that make up the task. This makes assigning the issue report to a single employee group as a binary classification, an appropriate classification method. However, it could be necessary for a business analyst, a product owner, and a software developer to open the same task in different project management or different software teams. For this kind of data set, the binary classification research we conducted is not a suitable approach.

Conclusion validity refers to the extent to which our conclusion is considered credible and accurately reflects the results of our study. All the issue data we used are real issue reports collected from the software development team. We use issue reports in 10 years time span, but according to the information we received from within the company, the turnover rate is low compared to other companies, and especially the team leaders and testers, who usually create the tasks, are generally people who worked for the company for 10+ years. This may have caused a high similarity in the language, namely the text, of the opened tasks and created a conclusion threat at the accuracy rate. To assess how well the accuracy values we find are consistent among themselves, we used statistical significance tests as outlined in Section 4.3. By proving our hypotheses in this manner, we showed the consistency of the outcomes we discovered and the effectiveness of our methods.

5.2 User Evaluation

An employee of the company who served as a Senior Software Developer and created the architecture of the projects where we use the data set as well as an employee who serves as the Team Leader of the three projects we have are interviewed for this section about our application. On all the projects where we use the data set, a software developer has been employed by this company for three years. Three of the projects are being led by the team leader, who has been employed by the company for ten years. After spending about 15 minutes outlining our application, we evaluate the outcomes by conducting a few joint experiments. In the first section, we find an issue that has been done and our model assigns a different assignee value than the one made on ITS, and we talk about it. This issue is assigned to the team leader in ITS, but our model assigns it to the junior software developer. We want to know what team members think about this example of a wrong assignment. Our model assigns it to a different employee, both in terms of experience and field. First off, they state that from the test team or the customer support team, an incorrectly tested issue that is not actually a problem or issues that should not be developed can be opened. In this case, the team manager can take the issue and bring it to Won’t Fix status. This is also the case in this issue. In fact, this is something that should not be done. They state that for such situations, the team manager must decide.

In the second part, we assign an idle issue that has not yet been assigned by our classification method. The model labels the issue as the junior software developer. We are asking for their opinion to find out if this is the correct assignment. Considering the scope of the job, both team members state that it is appropriate to assign this job to a junior friend, as the requirement for seniority is quite low. Assigning it to mid or senior employees would not be a problem either, but they would not consider assigning this issue to more experienced employees.

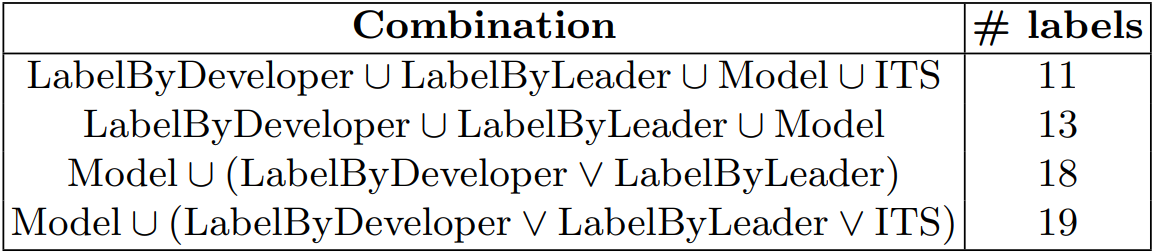

In the next section, we give a data set consisting of 20 issues assigned and closed in ITS to the senior software developer and team leader in the company. They label these issues according to the labels we set. We compare the tags made with the assigned values in the issue’s data set and the assignments made by our best working system. Table 8 shows the results of this comparison.

First, we compare the labels of Senior Software Developer and Team Leader with the assigned values on the ITS and the label values of our best model and find the issues where all four are the same. The least number of intersections are 11 common labels with values where all four of them are the same. In order to understand whether this difference is due to mislabeling of our model or due to labeling differences between employees and ITS, in combination 2, we check the values where the labels made by our model and all three of the labels made by the employees intersect. Here the total intersection turns out to be 13. We show the labels that these two issues are assigned differently on ITS to the employees. Both employees gave the same tag value, but a different employee type seems to have closed the issue in ITS. They think that if it’s a problem that an employee has dealt with before, they may have taken it for that reason and it could be both types of labels. In the third combination, we find the values that our model has labeled in common with at least one employee to see if there are labels that they think differently among the employees or if our model has assigned completely different assignments from the two. Here, the number of common tags increases to 18. We find five issues that two employees tagged differently, and we ask the employees what they think about these differences. After the exchange of ideas between each other, in two of the different tagged issues, the developer thinks that the tag value of the leader is more appropriate, and in the other two, the leader thinks that the tag value of the developer is more accurate. In an issue, they cannot reach a common decision. Finally, we add the values from the ITS to the combination and find the values where our model coincides with at least one of the labels of the two employees and the label from the ITS. Thus, we see that our model and ITS have the same label value with that undecided issue.

In the last section, we direct the questions we prepared to the employees to get an idea about the system. We ask whether they would prefer such a system to be used in business life. They state that if they are converted into an application and the necessary features are added, for example, if an interface is provided where they can enter the current number of personnel, and their experiences, add and remove employees who are currently on leave, and if they turn into a plugin that integrates with the Jira interface, they will want to use it. Afterward, we ask if you find the system reliable and do you trust the assignments made. They say that they cannot completely leave the assignment to the application, and they will want to take a look at the assignments made by the application. The team leader adds that if there is a feature to send his approval, for example, by sending an e-mail before making the appointment, he will take a look and approve it, except for exceptional cases, and his work will be accelerated. As a result of the assignments made with the system, we address the question: Do you think that the average task solution time will decrease? It can reduce the average task resolution time, but they state that they think that if similar tasks are constantly sent to similar employee groups, this may have undesirable consequences for employee happiness and development. Next, we ask if you think using the system will reduce planning time. There are times when they talked at length in team planning meetings about who would get the job and who would be more suitable. At least, they think that if they have a second data, it can be a savior in cases where they are undecided. Finally, we would like to know your suggestions to improve the system. They state that if this system is going to turn into an application, they will want to see the values that the application pays attention to, to be able to edit and remove or add new ones. They think that if it has a comprehensive and user-friendly interface, it will still be suitable for use in business processes.

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.