Authors:

(1) Pinelopi Papalampidi, Institute for Language, Cognition and Computation, School of Informatics, University of Edinburgh;

(2) Frank Keller, Institute for Language, Cognition and Computation, School of Informatics, University of Edinburgh;

(3) Mirella Lapata, Institute for Language, Cognition and Computation, School of Informatics, University of Edinburgh.

Table of Links

- Abstract and Intro

- Related Work

- Problem Formulation

- Experimental Setup

- Results and Analysis

- Conclusions and References

- A. Model Details

- B. Implementation Details

- C. Results: Ablation Studies

A. Model Details

In this section, we provide details about the modeling components of our approach. We begin with the GRAPHTRAILER architecture (Section A.1), then discuss how the TP identification network is trained (Section A.2), and finally give technical details about pre-training on screenplays (Section A.3) and the sentiment flow used for graph traversal (Section A.4).

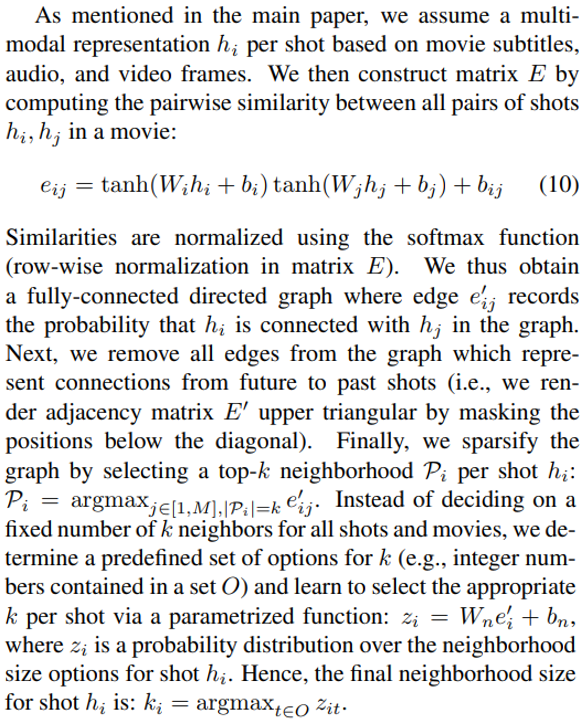

A.1. GRAPHTRAILER

We address discontinuities in our model (i.e., top-k sampling, neighborhood size selection) by utilising the Straight-Through Estimator [7]. During the backward pass, we compute the gradients with the Gumbel-softmax reparametrization trick [25, 32]. The same procedure is followed for constructing and sparsifying scene-level graphs in the auxiliary screenplay-based network.
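To illustrate the general idea, the following minimal sketch shows a straight-through Gumbel-softmax step for a single categorical choice. It is not the GRAPHTRAILER implementation: the function name `gumbel_softmax_st`, the temperature `tau`, and the restriction to one choice (rather than top-k or neighborhood size selection) are assumptions made for illustration. The forward pass returns a discrete one-hot sample, while the Jacobian of the soft relaxation stands in for the gradient of that sample.

```python
import math
import random


def gumbel_softmax_st(logits, tau=1.0, rng=None):
    """Return (hard one-hot sample, soft relaxation, surrogate Jacobian).

    Hypothetical helper for illustration only; names and defaults are assumptions.
    """
    rng = rng or random.Random()

    # Gumbel(0, 1) noise: g = -log(-log(u)) with u ~ Uniform(0, 1).
    noise = []
    for _ in logits:
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep both logs finite
        noise.append(-math.log(-math.log(u)))

    # Tempered softmax over the perturbed logits (max-shifted for stability).
    scores = [(l + g) / tau for l, g in zip(logits, noise)]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    soft = [e / total for e in exps]

    # Forward pass: a discrete one-hot choice (the argmax of the relaxation).
    winner = max(range(len(soft)), key=soft.__getitem__)
    hard = [1.0 if i == winner else 0.0 for i in range(len(soft))]

    # Backward pass (straight-through): use d soft_i / d logit_j
    # = soft_i * (delta_ij - soft_j) / tau as the gradient of the hard sample.
    n = len(soft)
    jac = [
        [soft[i] * ((1.0 if i == j else 0.0) - soft[j]) / tau for j in range(n)]
        for i in range(n)
    ]
    return hard, soft, jac


hard, soft, jac = gumbel_softmax_st([2.0, 0.5, -1.0], tau=0.5, rng=random.Random(0))
```

In an automatic differentiation framework, the same effect is usually obtained by returning the hard sample in the forward pass while routing gradients through the soft one; lower values of `tau` make the relaxation closer to one-hot at the cost of higher gradient variance.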

A.2. Training on TP Identification

Section 3 presents our training regime for the video- and screenplay-based model assuming TP labels for scenes are available (i.e., binary labels indicating whether a scene acts as a TP in a movie). Given such labels, our model is trained with a binary cross-entropy loss (BCE) objective between the few-hot gold labels and the network’s TP predictions.

In practice, however, our training set contains only silver-standard labels for scenes. These labels are released together with the TRIPOD [41] dataset and were created automatically. Specifically, TRIPOD provides gold-standard TP annotations for synopses (not screenplays), under the assumption that synopsis sentences are representative of TPs. Sentence-level annotations are then projected to scenes with a matching model trained with teacher forcing [41] to create the silver-standard labels.
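As a concrete illustration of the BCE objective over few-hot labels described in this section, the following sketch computes the loss for one movie. The helper names `few_hot` and `bce_loss`, the averaging over scenes, and the clipping constant `eps` are assumptions for illustration, not details taken from our implementation.

```python
import math


def few_hot(num_scenes, tp_scene_indices):
    """Build a few-hot label vector: 1.0 for scenes labeled as a TP, else 0.0."""
    labels = [0.0] * num_scenes
    for i in tp_scene_indices:
        labels[i] = 1.0
    return labels


def bce_loss(probs, labels, eps=1e-7):
    """Mean binary cross-entropy between predicted TP probabilities and labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(probs)


# Hypothetical movie with five scenes, where scenes 1 and 3 carry (silver) TP labels.
labels = few_hot(5, [1, 3])
loss = bce_loss([0.1, 0.9, 0.1, 0.9, 0.1], labels)
```

Because only a few scenes per movie are positive, the labels are heavily imbalanced; the sketch leaves the loss unweighted and does not assume any particular remedy.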

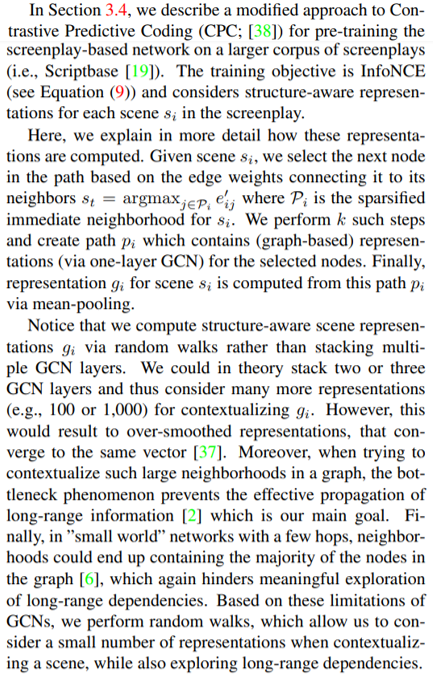

A.3. Self-supervised Pre-training

A.4. Sentiment Flow in GRAPHTRAILER

One of the criteria for selecting the next shot in our graph traversal algorithm (Section 3.1) is the sentiment flow of the trailer generated so far. Specifically, we adopt the hypothesis [9] that trailers are segmented into three sections based on sentiment intensity. The first section has medium intensity to attract viewers, the second section has low intensity to deliver key information about the movie, and the third section displays progressively higher intensity to create cliffhangers and excitement for the movie.

Accordingly, given a budget of L trailer shots, we expect the first L/3 shots to have medium intensity without large variations within the section (e.g., we want shots with average absolute intensity close to 0.7, where all scores are normalized to a range from -1 to 1). In the second part of the trailer (i.e., the next L/3 shots), we expect a sharp drop in intensity, with shots in this section maintaining more or less neutral sentiment (i.e., 0 intensity). Finally, for the third section (i.e., the final L/3 shots), we expect intensity to steadily increase. In practice, we expect the intensity of the first shot of this section to be 0.7 (i.e., medium intensity), increasing by 0.1 with each subsequent shot until we reach a peak at the final shot.
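The expected intensity profile can be sketched as a simple target sequence over the L shots. The function name `target_intensity_profile` is hypothetical, and two details are assumptions not stated above: when L is not divisible by 3, the remaining shots are assigned to the final section, and the rising intensity is capped at 1.0 so that it stays within the normalized range.

```python
def target_intensity_profile(num_shots, medium=0.7, neutral=0.0, step=0.1, cap=1.0):
    """Return the expected absolute sentiment intensity for each trailer shot."""
    third = num_shots // 3

    # Section 1: medium intensity with no variation.
    first = [medium] * third

    # Section 2: sharp drop to neutral sentiment.
    second = [neutral] * third

    # Section 3: start at medium and rise by `step` per shot.
    # Assumption: leftover shots go here, and values are capped at `cap`.
    last_len = num_shots - 2 * third
    last = [min(cap, medium + step * i) for i in range(last_len)]

    return first + second + last


profile = target_intensity_profile(9)
```

During graph traversal, a candidate shot at a given position could then be compared against the corresponding entry of `profile`, for example by the absolute difference between its intensity and the target; how this criterion is combined with the other selection criteria is described in Section 3.1.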

This paper is available on arXiv under the CC BY-SA 4.0 DEED license.

[9] https://www.derek-lieu.com/blog/2017/9/10/the-matrix-is-a-trailer-editors-dream