Authors:

(1) Nir Chemaya, University of California, Santa Barbara and (e-mail: [email protected]);

(2) Daniel Martin, University of California, Santa Barbara and Kellogg School of Management, Northwestern University and (e-mail: [email protected]).

Table of Links

- Abstract and Introduction

- Methods

- Results

- Discussion

- References

- Appendix for Perceptions and Detection of AI Use in Manuscript Preparation for Academic Journals

2 Methods

In order to answer our main research questions, we split our design into two parts. The first part was a survey of the academic community that captured academics’ views on reporting AI use in manuscript preparation. The second part was to test an AI detector’s reaction to AI use in manuscript preparation. Our primary focus was on using AI to fix grammar and rewrite text, which we consider to be important intermediate cases between not using AI at all and using AI to do all of the writing based on limited inputs (e.g., only a title and/or a set of results). We asked academics for their perceptions about these two kinds of AI usage in manuscript preparation and investigated how the detector responded to these different kinds of usage. All of this will be explained in more detail in the following subsections.

2.1 Survey Design

Beginning August 22nd 2023 and running until September 20th 2023, we conducted a brief unpaid survey about perceptions of AI use in manuscript preparation. We sent this survey to a convenience sample of academics; specifically, we sent it to three listservs: the UCSB Economic Department listserv (connecting graduate students, professors, and postdocs at the University of California, Santa Barbara); the Economics Science Association (ESA) announcement listserv (ESA is the leading organization for experimental economics); and the Decision Theory (DT) Forum listserv (the DT Forum is a listserv of academics working on decision theory that is run by Itzhak Gilboa). We sent the survey with a few days delay between each group, which allows for a rough measure of the respondents from each source. We had a total of 271 respondents complete the survey: 38 from UCSB, 199 from UCSB and the ESA community, and 34 from UCSB, the ESA community, and the DT Forum (this number does not include 20 individuals who did not specify an academic role). The median time to answer the survey was 1.21 minutes, and survey respondents provided informed consent (as shown in Appendix A).

In this survey, we focused on two main aspects related to perceptions of AI use in manuscript preparation. The first aspect is whether authors should acknowledge using ChatGPT to fix grammar or to rewrite text. We asked this over two separate questions, as shown in Figure 10 in Appendix A. These two uses of ChatGPT reflect to many of the use cases of LLMs suggested in articles, websites, and online courses. The second aspect is whether using ChatGPT to modify an academic manuscript is unethical. Again, we split this into two questions – one for grammar and one for rewrite – to allow researchers to address whether ethics also depends on usage. Because we suspected that there might be differences in these perceptions and an academic’s role and language background, we asked respondents if they were a native speaker of English and their current role (postdoc, student, untenured professor, tenured professor, and/or other). Of the 271 respondents who completed our survey, 83 reported being a native English speaker, and we categorized 67 respondents as students, 32 as postdocs, 59 as untenured professors, and 113 as tenured professors. If someone reported multiple roles, we took the “highest” report, ranked in this order.

In addition to these questions, we added follow-up questions on a second page of the survey about whether authors should acknowledge other writing services and tools such as Word, Grammarly, proofreading, and the work of research assistants (see Figure 11 in Appendix A). We asked this question only after the ChatGPT questions and on a new page because our main focus was the perception of AI (e.g., ChatGPT) in academic writing, and because we wanted to avoid these followup questions influencing the answers given about ChatGPT. In addition, we did not randomize the order between ChatGPT and other services because the convenience sample already knew this was a survey about ChatGPT, so that would have been in their mind already when completing the survey. However, by asking questions about ChatGPT first, we might have reduced differences in reported perceptions with other writing services, as people might feel the need to report consistently for a given use.

To make the questions about other sources comparable the questions about ChatGPT, we used the same question format and split the comparison into two groups: fixing grammar and rewriting text. The tools we included for fixing grammar included Word, Grammarly, and RA help. Importantly, we wrote explicitly in the question that these tools were to be used for fixing grammar. For rewriting text, we used proofreading and RA help. Once more, we explicitly asked whether authors should acknowledge using these tools and services for rewriting text for an academic journal.

2.2 Detection Design

Next, we tested how an AI detector would react to using ChatGPT to fix grammar in academic text and to rewrite academic text. We first collected titles and abstracts from 2,716 papers published in the journal Management Science from January 2013 to September 2023. We excluded articles with the following words in their titles, as they did not appear to be original articles: “Erratum,” “Comment on,” “Management Science,” “Reviewers and Guest Associate Editors,” and “Reviewers and Guest Editors.” We intentionally included papers that were published before the launch of ChatGPT in November 30, 2022 in order to have source text that was plausibly unimpacted by LLM use.

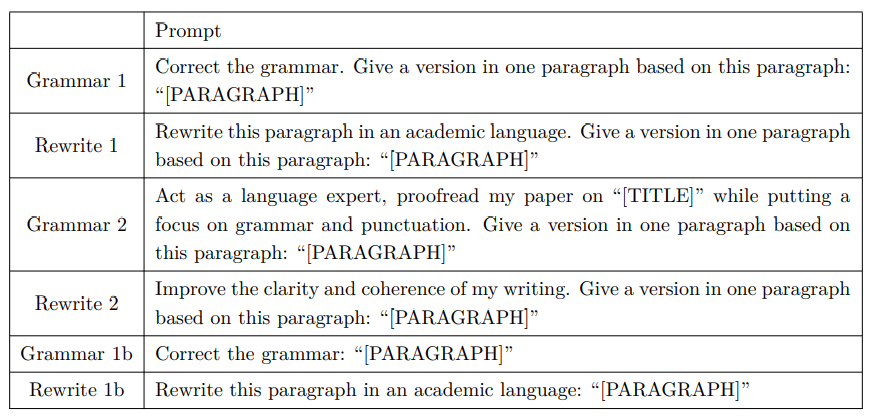

We revised these abstracts using a variety of prompts, as seen in Table 1 below. Rather than selecting the prompts ourselves, we wanted to use an external source. We decided to use prompts from the online GitHub page ChatGPT Prompts for Academic Writing, which advises researchers on how to use ChatGPT. We chose this source because it was the first link returned from a Google search of “ChatGPT Prompts for Academic Writing.”

Our baselines prompts were “Grammar 1” and “Rewrite 1,” and for robustness we also considered two functionally related prompts, “Grammar 2” and “Rewrite 2.” We added a restriction at the end of these prompts to “Give a version in one paragraph based on this paragraph” in order to meet the requirement that the abstract be only one paragraph in length. To consider the robustness of our results to this additional language, we also ran versions of our baseline prompts that did not include it: “Grammar 1b” and “Rewrite 1b.”

It has been shown that there are ways to fool certain detectors, such as by adding strange characters to the text, and this is a constantly evolving game of cat-and-mouse as detectors evolve. Although this could be of interest for researchers, we do not study this particular phenomenon. Instead, it is our goal to see whether an academic who uses ChatGPT for widely-proposed purposes – without further edits or detection avoidance strategies – would have their writing be flagged as AI generated.

To revise these abstracts at scale, we leveraged the GPT API with settings that would produce output that closely mirrors the output of a researcher using ChatGPT to revise academic writing. The specific model we used was gpt-3.5-turbo-0613 (GPT-3.5 Turbo released in June 13 2023), and we kept the default settings for temperature, top p, frequency penalty, and presence penalty. We also used the default system prompt, “You are a helpful assistant,” which is a background prompt that can be changed in the API but not in ChatGPT itself. We did a fresh call each time we used a prompt to avoid learning and history effects.

Finally, we used a leading paid service (Originality.ai) to see how AI detection algorithms might react to this use of LLMs. We first evaluated the original Management Science abstracts, and then the abstracts revised by GPT-3.5 based on all of the prompts. Originality.ai provides an “AI score,” which is a value between 0% and 100% that is interpreted as the likelihood that AI wrote the text being evaluated. A high score means a high likelihood that AI generated the text. Specifically, the company states: “If an article has an AI score of 5%... there is a 95% chance that the article was human-generated (NOT that 5% of the article is AI generated).” Akram (2023) studied a number of popular detection tools and found that Originality.ai had the highest accuracy rate (97%).

This paper is available on arxiv under CC 4.0 license.