Authors:

(1) Omid Davoodi, Carleton University, School of Computer Science;

(2) Shayan Mohammadizadehsamakosh, Sharif University of Technology, Department of Computer Engineering;

(3) Majid Komeili, Carleton University, School of Computer Science.

Table of Links

Interpretability of the Decision-Making Process

The Effects of Low Prototype Counts

Background Information

HIVE

HIVE[12] (Human Interpretability of Visual Explanations) is a framework for human evaluation of interpretability in AI methods that use visual explanations. HIVE uses questionnaires to ask humans to rate multiple aspects of the interpretability of AI methods. These include similarity, agreement, and distinction tests.

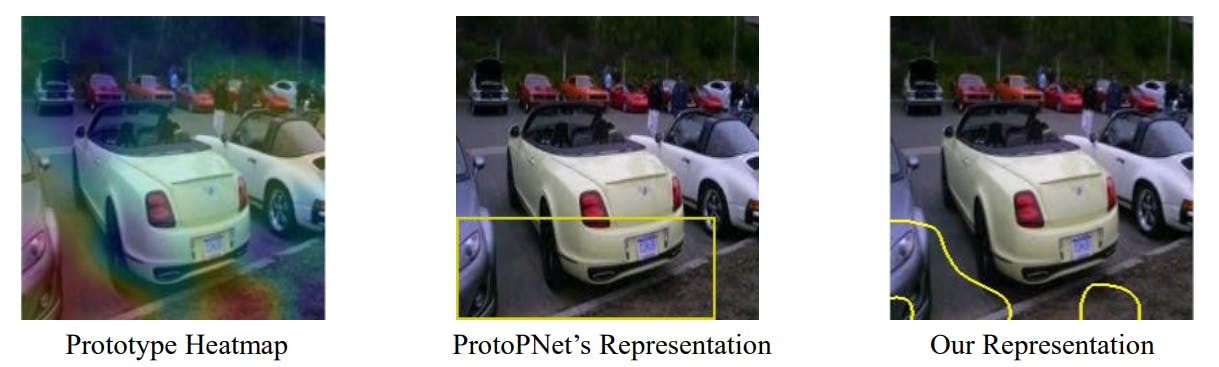

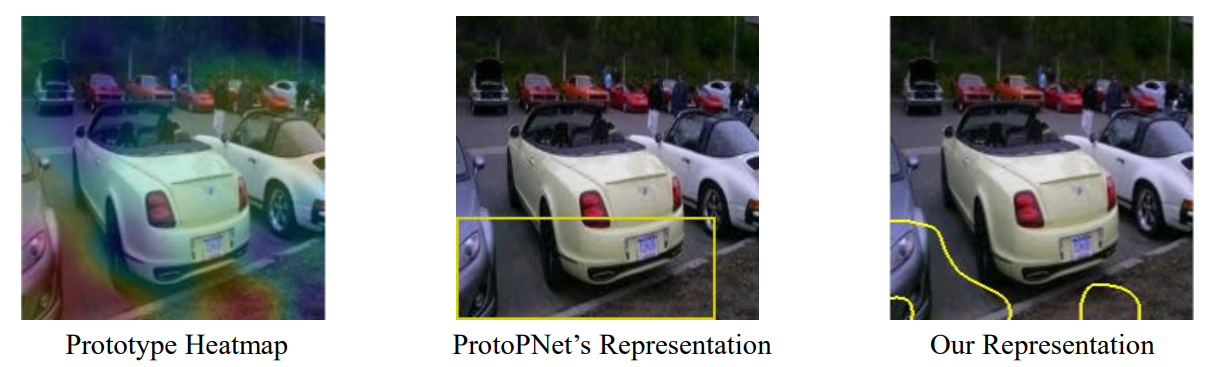

There are, however, some problems with the question design of the HIVE framework that makes it flawed for the purpose of assessing interpretability for prototype-based methods. In particular, they ask the human participants to rate multiple criteria in a fine-grained manner. This practice has been shown to be unreliable because human opinion can differ greatly when asked to quantify vague concepts[13]. HIVE also assumes that every bit of explanation is interpretable by itself, even though there are examples showing the opposite (see Figure 2-a). Finally, HIVE does not follow the same process that is used by the AI model to classify a query sample. It splits the prototypes into class categories when showing them to human participants and hides the activation values. This is completely opposite of the way many of the part-prototype methods operate. Our proposed method does not suffer from these deficiencies.

ProtoPNet

ProtoPNet[9] is a neural-network-based model that utilizes the similarity between parts of an input to a number of part-prototypes to classify images. The image first goes through a backbone network, usually a pre-trained image network such as ResNet consisting of multiple convolutional layers, and is turned into a spatially related matrix of embeddings. These embeddings are usually obtained in the penultimate layers of the common pre-trained networks. The distance between the closest of each embedding to each prototype is then fed into a fully connected output layer with softmax to determine the final class.

The resulting network is end-to-end trainable via gradient descent. A weighted sum of multiple loss functions is used to train the model. These losses include Cross-entropy loss for the final classification loss, as well as a Clustering loss designed to make sure all query samples have at least one prototype that is close to some part of them. There is also a Separation loss designed to make sure samples are far from prototypes of other classes.

SPARROW

SPARROW[17] is a method based on ProtoPNet that aims to make sure that the prototypes are semantically coherent. In principle, it aims to enforce sparsity, narrowness of scope and uniqueness to the prototypes. It does so by adding two additional components to the loss equation. The first one tries to decrease angular similarity between prototypes themselves to make sure they are unique, and the second one tries to make sure the prototypes themselves are as close as possible to at the query samples in the embedding space of the model in the hope of making the scope of the prototypes narrower.

Deformable ProtoPNet

Deformable ProtoPNet[18] is another method that aims to overcome some of the issues of the original. In this case, the rigidity of the prototypes in ProtoPNet. The prototypes in ProtoPNet are vectors with a spatially rigid semantic meaning in the pixel space. Deformable ProtoPNet aims to remedy that by creating spatially deformable prototypes where the position of features within each prototype can be different while still encoding the same concept.

ProtoTree

By leveraging the interpretability of the decision trees, a new prototype-based model was introduced called the Neural Prototype Tree (ProtoTree)[19]. A ProtoTree consists of a Convolutional Neural Network followed by a soft binary tree structure with learnable prototypes in its decision nodes. The distance between the nearest latent patch and a prototype determines to what extent the prototype is present anywhere in an input image, which influences the routing of the image through the corresponding node. As it uses a soft decision tree, a query sample is routed through both children, each with a certain weight. After that, the model makes its prediction based on the taken paths to all leaves and their class distributions. However, the authors claim that a trained soft ProtoTree can convert into a hard ProtoTree without losing accuracy. In contrast with the ProtoPNet with a linear bag-of-prototypes, the ProtoTree enforces a sequence of steps and supports negative associations. Consequently, it can drastically reduce the number of prototypes.

TesNet

Another interpretable network architecture was designed by introducing a plug-in transparent embedding space (TesNet)[20] with basis concepts constructed on the Grassmann manifold. Concerning ProtoPNet, the authors mention two main disadvantages. First, the prototypes are implicitly assumed to follow a Gaussian distribution, which is improper for the complex data structure. Moreover, it cannot explicitly ensure that the learned prototypes are disentangled, an essential property for interpretable learning. The TesNet model was designed to alleviate such problems by proposing a transparent embedding space. In terms of architecture, the prototype layer in the ProtoPNet is replaced by a Transparent Subspace layer with "basis concepts". This layer covers as many subspaces as the number of classes, and each subspace is spanned by M basis concepts. The M within-class concepts are assumed orthogonal to each other, and each concept can be traced back to the high-level patches of the feature map. To this end, it defines rigorous losses. They include orthogonality of within-class concepts, subspace separation, high-level patche grouping, and concept-based classification loss.

ProtoPool

After ProtoTree and TesNet, ProtoPool[21] was introduced. The model is said to have mechanisms that reduce the number of prototypes and obtain higher interpretability and easier training. The first is achieved through prototype sharing, the second comes from defining a novel similarity function, and the third results from employing a soft assignment based on prototype distributions to optimally use prototypes from the pool. The prototypes must focus on a salient visual feature, not the background. For this purpose, instead of maximizing the global activation, they widen the gap between the maximal and average similarity between the image activation map and prototypes. The other two advantages come from having a pool of prototypes and learning distributions of them per class. Therefore, the architecture is very similar to ProtoPNet, with some changes in the prototype pool layer, which contribute to prototype distribution and applying the new focal similarity function.

ACE

ACE[22] is an unsupervised method for extracting visual concepts from a dataset. These concepts are small regions of the images that seem to be important identifiers of the subjects of the pictures. To find these concepts, first, the images are segmented into small pieces, and then each piece is fed to a state-of-the-art classifier network. The output of the bottleneck layer of this network is then used as a representation of the concept present in the segment. Clustering methods are then utilized to find important clusters within these representations which are then picked as important concepts for the dataset.

Datasets Used

We used three datasets in our experiments. The datasets used had to deal with concepts that were broadly understandable to the average person. Note that it is not necessary for a human to be able to distinguish between the classes within a dataset (for example, distinguishing between different species of birds). The only requirement is for people to understand the general concept of the subjects of the image (For example, knowing what a bird is).

CUB-200-2011

CUB-200-2011[23] is a dataset of birds consisting of 11988 total images that are split into 200 classes. This is a popular dataset in part-prototype network research. It also depicts subjects that the average human can understand. For our purposes, we split the dataset into two halves with 6194 images for training and 5994 images for testing. The reason for such a drastic split is that the augmentation method we used (which is the same as the one used for ProtoPNet and many of the other derived methods) multiplies the number of training images by a factor of 30.

Stanford Cars Dataset

The Stanford Cars Dataset[24] is an image dataset consisting of 196 different models of cars. They are split into 8339 training and 8237 test images. This is another dataset where the subjects are generally well understood by an average human and thus, was a good pick for our experiments. The same augmentation process was also done on this dataset except that there are no bounding box coordinates available for this dataset. As a result, the images were not cropped to the subjects.

ImageNet Subset

ImageNet[25] is a very diverse image dataset containing a hierarchy of classes. We chose 7 classes out of these and created a subset that we believed was understandable by the average human while also containing similar concepts and a relatively balanced number of samples per class. These classes were "Big Cats", "Collie breeds of dogs", "Domestic Cats", "Foxes", "Swiss Mountain Dogs", "Wild Cats" and "Wolves". It contains 16586 training and 1686 test images. The same augmentation process was also done on this dataset.

This paper is available on arxiv under CC 4.0 license.