Authors:

(1) Kun Lan, University of Science and Technology of China;

(2) Haoran Li, University of Science and Technology of China;

(3) Haolin Shi, University of Science and Technology of China;

(4) Wenjun Wu, University of Science and Technology of China;

(5) Yong Liao, University of Science and Technology of China;

(6) Lin Wang, AI Thrust, HKUST(GZ);

(7) Pengyuan Zhou, University of Science and Technology of China.

Table of Links

3. Method and 3.1. Point-Based rendering and Semantic Information Learning

3.2. Gaussian Clustering and 3.3. Gaussian Filtering

4. Experiment

4.1. Setups, 4.2. Result and 4.3. Ablations

4. EXPERIMENT

4.1. Setups

Because few 3D Gaussian segmentation methods exist and no open-source code is available for Gaussian Grouping [7] or SAGA [8], we compare our method with previous NeRF segmentation methods [10]. For this purpose, we selected well-known NeRF datasets for our experiments, including LLFF [24], NeRF-360 [5], and Mip-NeRF 360 [25].

Both LLFF and NeRF-360 are centered on objects in the scene. They differ in that the camera viewpoint of LLFF varies only within a small range, whereas NeRF-360 contains images captured over a full 360° around the object. Mip-NeRF 360 features unbounded scenes, and its camera viewpoint also varies over a large range. In the Gaussian Clustering stage, the probability threshold β for each 3D Gaussian is set to 0.65, and the 50 3D Gaussians nearest to it are selected for the subsequent computation. The 3D Gaussians are built and trained on a single NVIDIA GeForce RTX 3090 GPU.
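To make the two clustering settings concrete, the following is a minimal sketch of how a threshold β = 0.65 and a neighborhood of 50 Gaussians might be used together. It is not the authors' implementation: the function name `refine_ambiguous_labels`, the brute-force neighbor search, and the rule of averaging the neighbors' object codes are all assumptions made for illustration.

```python
import math


def refine_ambiguous_labels(positions, probs, beta=0.65, k=50):
    """Relabel Gaussians whose top class probability falls below beta.

    positions: list of (x, y, z) Gaussian centers.
    probs: list of per-class probability lists (one "object code" per Gaussian).
    Returns one class index per Gaussian.
    """
    # Initial label: the most probable class of each Gaussian.
    labels = [max(range(len(p)), key=p.__getitem__) for p in probs]
    refined = list(labels)
    for i, p in enumerate(probs):
        if max(p) >= beta:
            continue  # confident Gaussian: keep its own label
        # Brute-force search for the k nearest Gaussians (excluding i itself).
        neighbours = sorted(
            (j for j in range(len(positions)) if j != i),
            key=lambda j: math.dist(positions[i], positions[j]),
        )[:k]
        if not neighbours:
            continue
        # Assumed aggregation rule: average the neighbours' object codes
        # and take the most probable class of that average.
        mean = [
            sum(probs[j][c] for j in neighbours) / len(neighbours)
            for c in range(len(p))
        ]
        refined[i] = max(range(len(mean)), key=mean.__getitem__)
    return refined


# Toy example: Gaussian 3 is ambiguous (top probability 0.55 < 0.65)
# and sits among three Gaussians that confidently belong to class 0.
positions = [(0, 0, 0), (0.1, 0, 0), (0, 0.1, 0), (0.05, 0.05, 0), (5, 5, 5)]
probs = [[0.9, 0.1], [0.8, 0.2], [0.95, 0.05], [0.45, 0.55], [0.1, 0.9]]
labels = refine_ambiguous_labels(positions, probs, beta=0.65, k=3)
```

The brute-force search is quadratic in the number of Gaussians; for a real scene, a spatial index such as a k-d tree would be the more practical choice.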

4.2. Result

Fig. 2 illustrates the segmentation results of our method in various scenes. The first two rows demonstrate the segmentation performance when the camera position varies within a small range. The third row depicts the segmentation result in a 360° scene. The final row highlights the results of multi-object segmentation, where distinct objects such as the TV, desk, and table are segmented separately. Our method’s efficiency is enhanced by the addition of the object code, a simple yet effective tool for handling complex scenes. In the first row, this code enables the successful removal of complex background elements such as the leaves behind the flower. In the third row, it ensures accurate segmentation even when the viewing angle changes significantly. Moreover, the object code, which encapsulates the probability distribution of each 3D Gaussian across all classes, facilitates the simultaneous segmentation of multiple objects in a scene.

Fig. 3 compares the results of our method with those of ISRF [10]. Owing to the explicit representation of 3D Gaussians, our segmentation results are more precise in detail than those of ISRF, which is particularly evident in the leaf section of Fig. 3.

4.3. Ablations

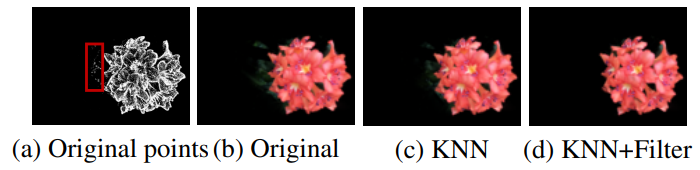

Fig. 4 presents the results of the ablation experiments, clearly demonstrating the effectiveness of KNN clustering and statistical filtering. Fig. 4(b) shows the initial segmented foreground object obtained without KNN clustering or statistical filtering, where it is noticeable that some leaves behind the flower are erroneously segmented. Fig. 4(c) displays the segmented foreground image after KNN clustering. Since KNN primarily addresses Gaussians with ambiguous semantic information, its impact on the visualization result is minimal. However, it can be observed that some incorrectly segmented Gaussians have been removed. Finally, Fig. 4(d) shows the result obtained after applying both KNN clustering and statistical filtering, which successfully filters out those Gaussians that were incorrectly segmented.
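The statistical filtering step in this ablation can be illustrated with a short sketch in the style of statistical outlier removal for point clouds. This is an assumed formulation, not the authors' code: the function name `statistical_filter`, the neighborhood size `k`, and the cutoff of the mean plus `std_ratio` standard deviations are hypothetical choices.

```python
import math
import statistics


def statistical_filter(positions, k=50, std_ratio=1.0):
    """Return the indices of Gaussians kept after outlier removal.

    positions: list of (x, y, z) centers of the segmented Gaussians.
    A Gaussian is dropped when its mean distance to its k nearest
    neighbours exceeds mean + std_ratio * std over all Gaussians.
    """
    n = len(positions)
    if n <= 1:
        return list(range(n))
    mean_dists = []
    for i in range(n):
        # Distances from Gaussian i to its k nearest neighbours (brute force).
        nearest = sorted(
            math.dist(positions[i], positions[j]) for j in range(n) if j != i
        )[:k]
        mean_dists.append(sum(nearest) / len(nearest))
    # Global statistics of the per-Gaussian mean neighbour distance.
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [i for i, d in enumerate(mean_dists) if d <= threshold]


# Toy example: eight corners of a unit cube plus one distant stray point.
cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
kept = statistical_filter(cube + [(10, 10, 10)], k=3)
```

In this toy example the stray point is far from every neighbor, so its mean neighbor distance exceeds the cutoff and it is filtered out, much as isolated, wrongly segmented Gaussians would be.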

This paper is available on arXiv under a CC 4.0 license.