(1) Feng Liang, The University of Texas at Austin (work partially done during an internship at Meta GenAI) (Email: [email protected]);

(2) Bichen Wu, Meta GenAI (corresponding author);

(3) Jialiang Wang, Meta GenAI;

(4) Licheng Yu, Meta GenAI;

(5) Kunpeng Li, Meta GenAI;

(6) Yinan Zhao, Meta GenAI;

(7) Ishan Misra, Meta GenAI;

(8) Jia-Bin Huang, Meta GenAI;

(9) Peizhao Zhang, Meta GenAI (Email: [email protected]);

(10) Peter Vajda, Meta GenAI (Email: [email protected]);

(11) Diana Marculescu, The University of Texas at Austin (Email: [email protected]).

Table of Links

- Abstract and Introduction

- 2. Related Work

- 3. Preliminary

- 4. FlowVid

- 4.1. Inflating image U-Net to accommodate video

- 4.2. Training with joint spatial-temporal conditions

- 4.3. Generation: edit the first frame then propagate

- 5. Experiments

- 5.1. Settings

- 5.2. Qualitative results

- 5.3. Quantitative results

- 5.4. Ablation study and 5.5. Limitations

- Conclusion, Acknowledgments and References

- A. Webpage Demo and B. Quantitative comparisons

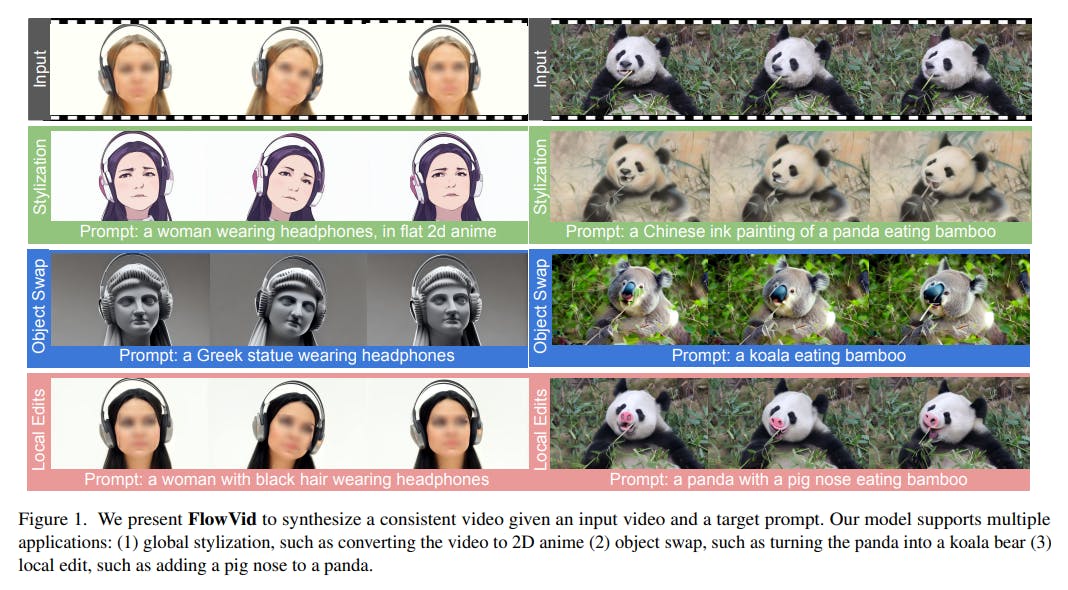

4.2. Training with joint spatial-temporal conditions

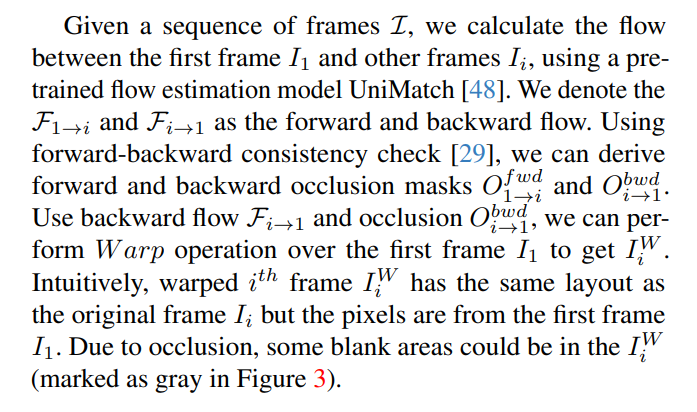

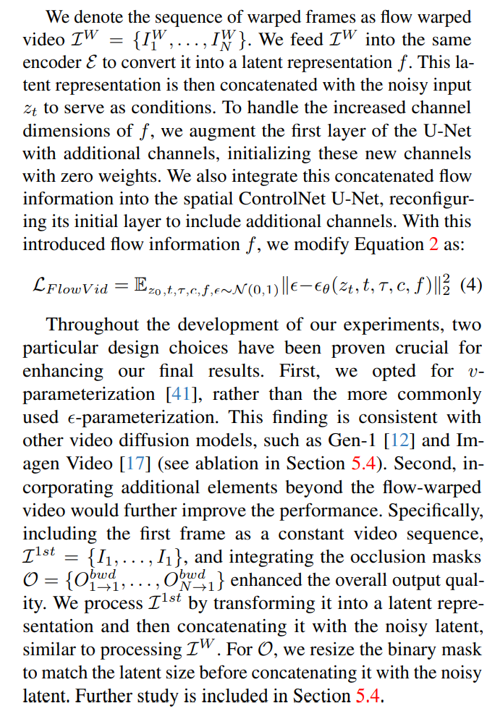

After inflating the image model, a straightforward approach would be to train the video model on paired depth-video data. However, our experiments show that this leads to sub-optimal results, as detailed in the ablation study in Section 5.4. We hypothesize that this approach neglects the temporal cues within the video, which makes frame-to-frame consistency hard to maintain. Some studies, such as Rerender [49] and CoDeF [32], do incorporate optical flow in video synthesis, but they typically apply it as a rigid constraint. In contrast, our approach uses flow as a soft condition, which lets the model tolerate the imperfections commonly found in flow estimation.
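To make the distinction between a rigid constraint and a soft condition concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a backward flow field with nearest-neighbor sampling, and all function names and the channel layout (`warp_with_flow`, `hard_constraint`, `soft_condition`) are hypothetical illustrations. A rigid use of flow overwrites generated pixels with warped ones, so any flow error is copied into the output. A soft use only supplies the warped frame and a validity mask as extra input channels, leaving the model free to disregard them where the flow is unreliable.

```python
import numpy as np

def warp_with_flow(frame, flow):
    """Backward-warp `frame` (H, W, C) with `flow` (H, W, 2), nearest-neighbor.

    Assumption: flow[y, x] = (dx, dy) points from each target pixel to its
    source location in `frame`. Returns the warped frame and a boolean mask
    (H, W) that is False where the source location falls outside the image.
    """
    h, w = frame.shape[:2]
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_x = np.rint(xs + flow[..., 0]).astype(int)
    src_y = np.rint(ys + flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    warped = np.zeros_like(frame)
    warped[valid] = frame[src_y[valid], src_x[valid]]
    return warped, valid

def hard_constraint(generated, warped, valid):
    """Rigid use of flow: overwrite generated pixels wherever the flow is valid."""
    out = generated.copy()
    out[valid] = warped[valid]
    return out

def soft_condition(noisy, depth, warped, valid):
    """Soft use of flow: stack the warped frame and mask as extra input channels.

    The channel order here is an illustrative assumption; a denoising network
    consuming this tensor can learn how much to trust the warped content.
    """
    mask = valid[..., None].astype(noisy.dtype)
    return np.concatenate([noisy, depth[..., None], warped, mask], axis=-1)
```

In this sketch, errors in `flow` propagate directly to the output of `hard_constraint`, whereas `soft_condition` only changes what the network sees as input.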

This paper is available on arXiv under a CC 4.0 license.